Analogue-Domain Machine Learning Computations Exploiting 2D Materials Properties

Nathaniel Tye, Apollo - University of Cambridge Repository, 2022

Abstract

For several decades, digital complementary metal-oxide-semiconductor (CMOS) technology has been dominant in computer hardware. This dominance stems from the abundance of silicon and its material properties, including stable native oxide formation and the ability to be miniaturised. However, new application classes and the physical limitations of traditional digital CMOS have triggered a wave of research into alternative materials and devices for computing applications.

Analysing and identifying trends is vital for guiding new ideas and research. This thesis establishes the context of the work by first exploring trends in integrated circuit (IC) development. It shows that the rapid growth of machine learning (ML) has resulted in a significant increase in the number of ML accelerators. However, many of these accelerators rely on conventional CMOS technology and so are subject to the same scalability limits, a barrier known as the accelerator wall. New materials and devices are therefore of interest. The thesis then discusses how graphene and related materials (GRMs) in particular may help overcome this wall and facilitate continued progress in the development of computer hardware.

To establish the state of the art, this thesis examines several candidate technologies for future computer devices. Despite an intense research focus in recent years, very few novel devices and materials for computation have seen large-scale commercialisation. This thesis explores the reasons for this and examines successful examples of novel devices and materials being applied as solutions to computational problems. This demonstrates that early-stage mapping of computational problems to devices and materials properties has the potential for a significant impact, providing a guiding principle for the subsequent work in the thesis.

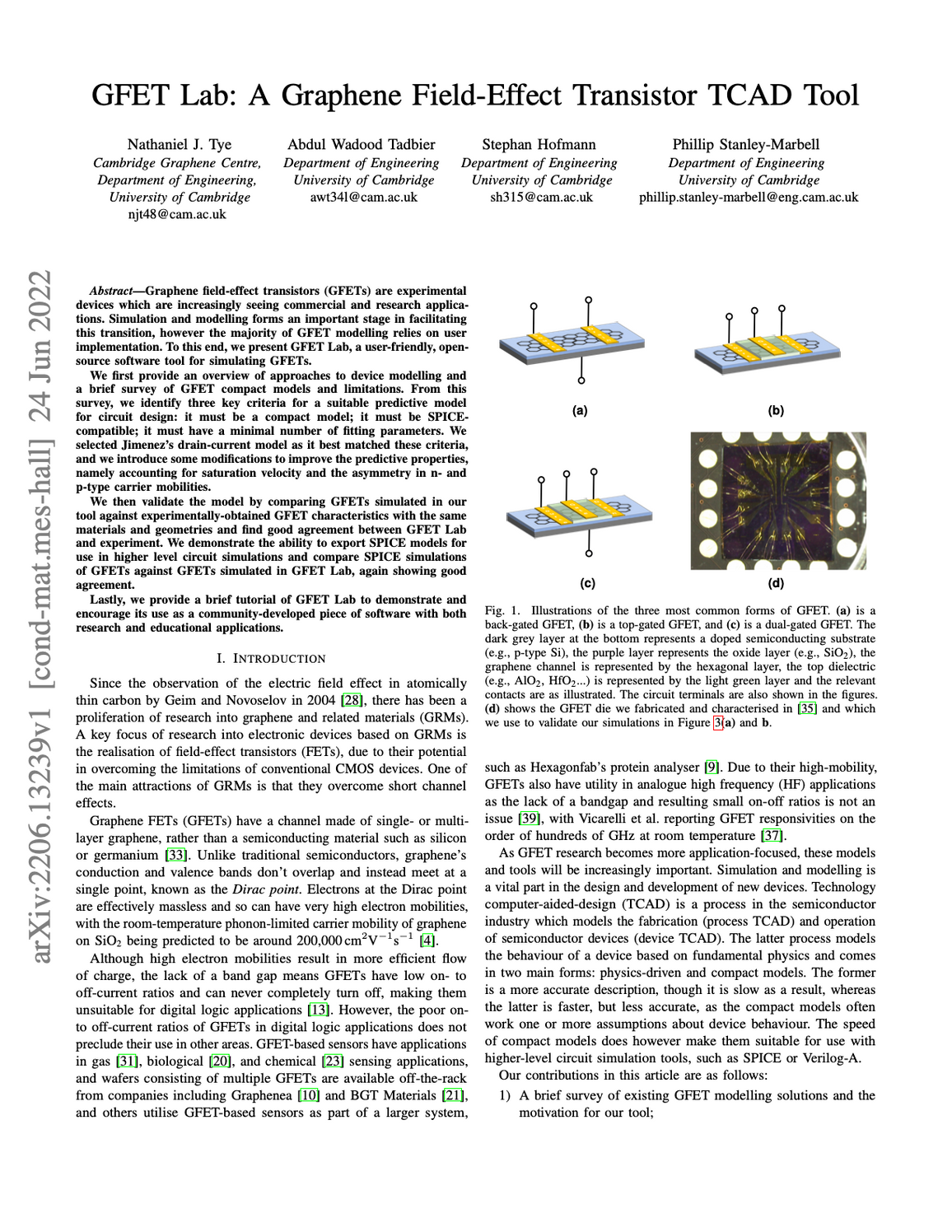

The thesis then investigates graphene field-effect transistors (GFETs), a type of field-effect transistor with a graphene channel, for computational applications. It explores different GFET models and describes the development of a stand-alone software tool that makes it easy to explore different GFET structures and geometries and to simulate their electrical characteristics. Validating the simulated characteristics against experimentally obtained characteristics for several GFET geometries provides evidence for the model's utility in circuit design. The tool forms an integral part of the subsequent work, guiding the development of a mixed-signal architecture for analogue function approximation. The architecture uses the nonlinear characteristics of GFETs as basis functions to approximate an arbitrary target function, demonstrating a direct mapping of a computational problem to device properties.
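To make the basis-function idea concrete, here is a minimal Python sketch. It assumes a simple textbook-style GFET model in normalised units, where the channel conductance has a rounded V shape around the Dirac point, g(v) = sqrt(r^2 + (v - v_D)^2). Each basis function is the same curve shifted to a different Dirac point v_D (hypothetically set by a per-device gate bias), and least squares chooses the weights. The names `gfet_conductance` and `fit_weights`, the residual value r, the Dirac-point spacing and the Gaussian target are all illustrative assumptions, not the model or parameters used in the thesis.

```python
import math

def gfet_conductance(v: float, v_dirac: float, residual: float = 0.05) -> float:
    """Hypothetical normalised GFET curve: V-shaped, rounded at the Dirac point."""
    return math.sqrt(residual**2 + (v - v_dirac) ** 2)

def solve(a: list[list[float]], b: list[float]) -> list[float]:
    """Solve a square linear system by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [rhs] for row, rhs in zip(a, b)]  # augmented matrix
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):  # back substitution
        x[r] = (m[r][n] - sum(m[r][c] * x[c] for c in range(r + 1, n))) / m[r][r]
    return x

def basis_row(v, dirac_points):
    # A constant offset plus one shifted GFET curve per Dirac point.
    return [1.0] + [gfet_conductance(v, d) for d in dirac_points]

def fit_weights(target, dirac_points, grid, ridge=1e-8):
    """Least-squares weights via the normal equations (A^T A + ridge*I) w = A^T y."""
    rows = [basis_row(v, dirac_points) for v in grid]
    ys = [target(v) for v in grid]
    n = len(rows[0])
    ata = [[sum(r[i] * r[j] for r in rows) + (ridge if i == j else 0.0)
            for j in range(n)] for i in range(n)]
    aty = [sum(r[i] * y for r, y in zip(rows, ys)) for i in range(n)]
    return solve(ata, aty)

def approximate(weights, dirac_points, v):
    return sum(w * g for w, g in zip(weights, basis_row(v, dirac_points)))

target = lambda v: math.exp(-v * v)                   # arbitrary target function
dirac_points = [-1.75 + 0.25 * k for k in range(15)]  # hypothetical bias offsets
grid = [-2.0 + 0.02 * i for i in range(201)]          # normalised input voltages
weights = fit_weights(target, dirac_points, grid)
max_err = max(abs(approximate(weights, dirac_points, v) - target(v)) for v in grid)
print(f"{len(weights)} weights, max |error| on [-2, 2]: {max_err:.4f}")
```

The small ridge term in the normal equations guards against the near-collinearity of neighbouring shifted curves. Changing `target` refits the same basis to a different function without touching the device model.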

As an application of the mixed-signal architecture, this thesis explores the problem of non-uniform random number generation. Following preliminary work, the thesis presents the development of an end-to-end approximation scheme. The scheme builds up from mathematical simulations, through circuit-level simulations, to an experimental demonstration of non-uniform random number generation using the architecture. It offers advantages over traditional software approaches in speed, energy efficiency, flexibility, and material costs. Compared with the state of the art, the experiments show that the approximation uses less power. They also suggest an average speedup of 2× when generating 10⁶ non-uniform samples with a reasonable level of accuracy, although further investigation is necessary to quantify these gains.
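One common route from a function approximator to non-uniform random numbers is inverse-transform sampling: uniform samples u are passed through an approximation of the target distribution's inverse CDF. The sketch below assumes that route. It uses a piecewise-linear lookup table as a stand-in for the analogue approximator. The table size, the clamping at u = 0.001 and u = 0.999, and the helper names are hypothetical, and the thesis's own circuit and target distributions may differ.

```python
import bisect
import random
import statistics

def build_table(inv_cdf, n_knots=64, eps=1e-3):
    """Sample the target inverse CDF at knots evenly spaced in [eps, 1 - eps]."""
    us = [eps + (1 - 2 * eps) * k / (n_knots - 1) for k in range(n_knots)]
    return us, [inv_cdf(u) for u in us]

def approx_inv_cdf(u, us, xs):
    """Piecewise-linear stand-in for the analogue function approximator."""
    if u <= us[0]:
        return xs[0]   # clamp the lower tail
    if u >= us[-1]:
        return xs[-1]  # clamp the upper tail
    i = bisect.bisect_right(us, u) - 1  # us[i] <= u < us[i + 1]
    t = (u - us[i]) / (us[i + 1] - us[i])
    return xs[i] + t * (xs[i + 1] - xs[i])

rng = random.Random(1)
us, xs = build_table(statistics.NormalDist().inv_cdf)  # target: standard normal
samples = [approx_inv_cdf(rng.random(), us, xs) for _ in range(100_000)]
mean, stdev = statistics.fmean(samples), statistics.pstdev(samples)
print(f"mean = {mean:.3f}, stdev = {stdev:.3f}")  # expected near 0 and 1 respectively
```

Swapping `NormalDist().inv_cdf` for another inverse CDF changes the output distribution without changing the sampling loop. This illustrates one form of flexibility in a function-approximation approach to random number generation.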

Given the growing prevalence of ML, the thesis lastly examines the potential of the mixed-signal architecture for accelerating ML computations. Examining execution-time breakdowns of contemporary ML problems suggests that accelerating artificial neural network activation functions could have a significant impact. Simulations applying the function approximation scheme to activation functions suggest levels of accuracy comparable to a pure software approach. The thesis then presents an end-to-end demonstration of activation function approximation applied to a convolutional neural network from the literature. This approach suggests an average speedup of 3.55× compared to a PyTorch implementation running on a conventional central processing unit (CPU).
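The sketch below illustrates the end-to-end idea on a much smaller scale than the thesis. A tiny fully connected network (not the thesis's CNN) with random, hypothetical weights is run once with the exact tanh activation and once with an approximated one, and the resulting logits and predicted classes are compared. The approximation is a piecewise-linear interpolation of tanh on 17 knots, standing in for the GFET-based approximator. The sketch checks accuracy only and says nothing about the speedups reported above.

```python
import math
import random

# Stand-in approximator (hypothetical): piecewise-linear interpolation of tanh
# on 17 evenly spaced knots in [-4, 4], saturating outside that range.
STEP = 0.5
KNOTS = [-4.0 + STEP * k for k in range(17)]
VALUES = [math.tanh(v) for v in KNOTS]

def tanh_approx(x: float) -> float:
    if x <= KNOTS[0]:
        return VALUES[0]
    if x >= KNOTS[-1]:
        return VALUES[-1]
    # Index of the knot to the left of x (clamped against rounding at the top end).
    i = min(int((x - KNOTS[0]) / STEP), len(KNOTS) - 2)
    t = (x - KNOTS[i]) / STEP
    return VALUES[i] + t * (VALUES[i + 1] - VALUES[i])

def dense(x, weights, biases, act):
    """One fully connected layer: act(W x + b)."""
    return [act(sum(w * xi for w, xi in zip(row, x)) + b)
            for row, b in zip(weights, biases)]

rng = random.Random(0)
n_in, n_hidden, n_out = 8, 16, 3
w1 = [[rng.gauss(0, 1 / math.sqrt(n_in)) for _ in range(n_in)] for _ in range(n_hidden)]
w2 = [[rng.gauss(0, 1 / math.sqrt(n_hidden)) for _ in range(n_hidden)] for _ in range(n_out)]
b1, b2 = [0.0] * n_hidden, [0.0] * n_out

def forward(x, act):
    hidden = dense(x, w1, b1, act)
    return dense(hidden, w2, b2, lambda v: v)  # linear output layer (logits)

inputs = [[rng.gauss(0, 1) for _ in range(n_in)] for _ in range(1000)]
matches, max_diff = 0, 0.0
for x in inputs:
    exact, approx = forward(x, math.tanh), forward(x, tanh_approx)
    max_diff = max(max_diff, max(abs(a - e) for a, e in zip(approx, exact)))
    matches += exact.index(max(exact)) == approx.index(max(approx))
agreement = matches / len(inputs)
print(f"max logit difference: {max_diff:.4f}, class agreement: {agreement:.1%}")
```

Comparing predicted classes as well as raw logits reflects what matters for inference. Small activation errors only change the output when two class scores are already close.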

Cite as:

Tye, N. (2022). Analog-Domain Machine Learning Computations Exploiting 2D Materials Properties [Apollo - University of Cambridge Repository]. https://doi.org/10.17863/CAM.98897

BibTeX:

@phdthesis{tye_2022,
  title={Analog-Domain Machine Learning Computations Exploiting 2D Materials Properties},
  url={https://www.repository.cam.ac.uk/handle/1810/352702},
  DOI={10.17863/CAM.98897},
  school={Apollo - University of Cambridge Repository},
  author={Tye, Nathaniel},
  year={2022},
  keywords={2D Materials, Graphene, Machine learning, Random Number}
}